Hugging Face Acquires Pollen Robotics to Bring Open-Source AI to Humanoids

A top AI platform accelerates its move into physical intelligence by joining forces with a French robotics team known for building friendly, modular humanoids

When Hugging Face acquired Pollen Robotics this April, it wasn’t just a company merger—it was a decisive bet on the physical future of artificial intelligence.

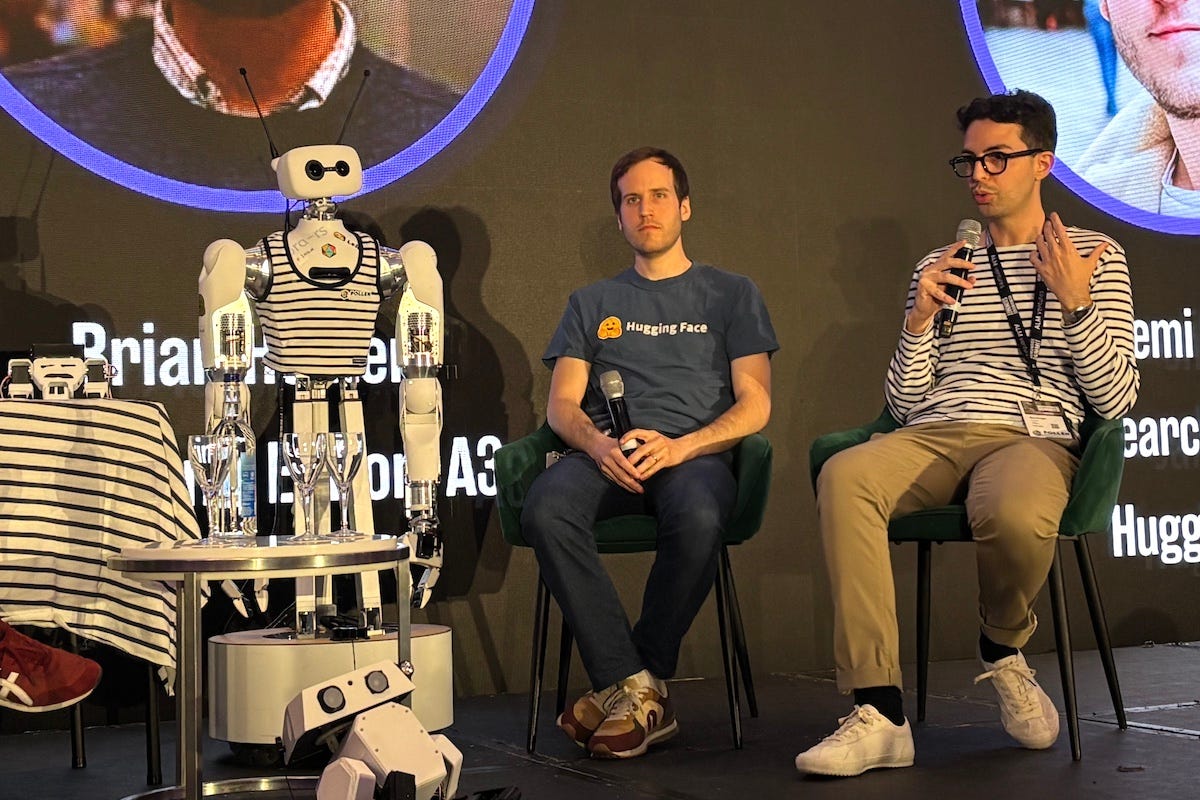

“We started the robots project to lower the barrier to entry for AI in robotics,” said Remi Cadene, the former Tesla Optimus scientist who now leads Hugging Face’s robotics efforts, speaking at the Humanoids Summit in London on May 29, 2025.

“It made sense for Hugging Face, because it's a big AI platform. And applying AI to robotics means you need a lot of data and some infrastructure. You need to build communities for people to meet around hackathons—and that’s what Hugging Face really knows a lot about.”

For nearly a decade, Hugging Face has been a go-to hub for open-source machine learning models. Its hosting infrastructure, collaborative tools, and AI app “Spaces” are now used by over 7 million developers. But the company sees a future that goes beyond screen-based interfaces—and directly into the physical world.

Cadene and his team had already begun testing robot AI models in 2024, including an early deployment in Bordeaux, France, where a robot successfully grasped objects like apples. The Pollen Robotics team happened to be nearby.

“Over the years, we were able to work closely with Pollen on many projects,” Cadene said. “At some point, it made a lot of sense. All our collaboration was really amazing.”

Founded in 2016, Pollen gained early recognition for its approachable, open-source humanoid platform, Reachy. When the second-generation model, Reachy 2, launched in October 2024, Hugging Face was already deeply involved in its development.

“The way the synergy between our two companies works is that we are experts on the hardware,” said Santiago Pavon, Pollen’s Growth Lead. “And Hugging Face is an expert on the AI part. So if you really wanted to have a strong embodied AI robot, we really needed to have strong knowledge on how to put AI on the embodied part.”

That mutual respect and a shared mission of democratizing robotics through open tools set the stage for the acquisition. With Reachy 2 already in use in labs at universities such as Cornell and Carnegie Mellon, the combined team aims to expand the open-source humanoid ecosystem with tools that are both research-grade and beginner-friendly.

Building Robots Like a Community Builds Code

Pollen Robotics’ modular approach to development grew out of necessity and out of listening to its community.

“Pollen started really on the creativity side,” said Pavon. “So two former PhD students are trying to have fun with robotics. They saw a big potential with 3D-printed, open-source robotics.”

The original robot, named Poppy, led to a printed robotic arm. Then came a camera.

“And then some people in the community asked us, ‘Why don't you have a camera?’ Because it's just an arm. So then we created a camera,” Pavon recalled. “And then other people came and said, ‘Why not a head?’ Because I'm not, I can't communicate. It's just a camera. So then we created the head, and then the torso.”

Each part of Reachy was created based on requests from researchers and hobbyists. “We try not to push to create like a really strong robot and push it to sell,” he added. “We start by asking the users: what do you want?”

The resulting robot is friendly, modular, and distinctly unthreatening. With animated eyes, expressive gestures, and omniwheels for mobility, Reachy 2 is designed for transparency and emotional relatability.

“Sometimes he tries to work on stuff, sometimes it works, sometimes it doesn't,” Pavon said. “So also to lower the expectation, because we don't think that humanoid robots can do everything right now.”

This design philosophy carries over to user interactions.

“If people are afraid of robots, if people don't want to interact with robots, then we're not doing things right,” Pavon said. “Instead of fear, we want people to feel empathy—like, ‘He's trying.’”

Under Hugging Face, the 30-person Pollen team continues to work from Bordeaux, with frequent trips to Paris for joint prototyping and testing.

“It's like we were already the same company,” Pavon said.

Cadene agreed: “It’s a lot about being bottom-up. The engineers and researchers know how to have an impact. They know when they need to work together to accomplish something.”

LeRobot and the $100 Robotic Arm

While Reachy 2 offers a full humanoid platform, Hugging Face’s LeRobot division is focused on making robotics accessible even at the lowest cost.

Created in 2024, LeRobot is an open-source Python library designed to bridge AI and hardware.

“We had this opportunity to write a lot of code in Python,” said Cadene. “Many people in the AI community were able to adopt it to start robotics quickly.”

The library includes middleware to control motors, cameras, and microphones, and a dataset module that compresses recordings into video format, dramatically reducing file size and enabling easier sharing.

“The dataset is 10 to 100 times smaller than what used to be the case in academia,” Cadene said.

In October 2024, LeRobot released its first hardware product: the SO-100, a 3D-printable robotic arm developed with French startup The Robot Studio. This spring, that evolved into the SO-101—an upgraded, camera-equipped arm with smoother motors and better balance. Priced between $100 and $500 depending on configuration, the SO-101 can be trained with reinforcement learning to perform basic tasks like picking and placing.

“We want robots that are affordable, easy to use, and easy to build,” Cadene said. “That’s how we think we can unlock the robotics ecosystem—not just through research, but through startups and DIY communities.”

LeRobot has also teamed up with NVIDIA on open-source training workflows and datasets, and will soon release new models that include audio processing, vision-language-action learning, and reinforcement-trained agents for manipulation tasks.

Robots That Learn With the World

Looking ahead, Hugging Face and Pollen are betting on embodied learning, which would give robots the ability to interact, adapt, and improve based on real-world feedback.

Cadene laid out the roadmap: “We scale the amount of data, open source. We train foundational models and compact and dynamic models. Then we include some other modalities, like sound. And also we add the capacity to interact with the world and to learn from this interaction, so it’s like reinforcement learning in the real world.”

Hugging Face’s global robotics hackathon in June will put that approach on full display. With events hosted across every continent, participants will build and test robot behaviors using Hugging Face tools and platforms.

“I expect more people to join the community, to join the fun,” Cadene said.

For Pavon, the integration with Hugging Face means freedom to innovate.

“We unleash this creativity and innovation that sometimes we see gets lost with private, investor-focused projects,” he said. “Right now, we’re trying to work on different products simultaneously. People are really open—how they build and create stuff.”

Ultimately, both teams are driven by the same conviction: that robotics should be open, understandable, and human-centered.

“My job,” said Cadene, “is to think about the future and to accelerate it—so that it goes for the good.”