Hallucination Isn’t the Only Risk as Enterprise AI Scales

As companies rush AI agents into production, multiple failure modes are emerging that extend far beyond incorrect answers

Hallucination has become a catch-all term in enterprise discussions about artificial intelligence, but practitioners closest to production systems say the risk is far more complex than the label suggests. As AI agents move from pilots into customer-facing and mission-critical roles, failures increasingly stem not from a single flaw but from a web of subtle behaviors that can undermine trust, compliance, and business outcomes.

In regulated industries such as finance, healthcare, and retail, these failures are no longer theoretical. Enterprises deploying conversational agents at scale are discovering that incorrect answers, overconfident responses, or refusals to answer legitimate questions can carry legal, reputational, and operational consequences.

“Hallucination is a buzzword, but we go beyond generic hallucination,” said Guillaume Sibout, head of sales and partnerships at Giskard, who told TechJournal.uk in an interview. “We detect contradictions, omissions, denial of answers to legitimate questions, and cases where a chatbot gives outdated information with too much confidence.”

Executives and compliance teams are also grappling with a less visible risk: proving why an AI agent responded in a particular way. When errors occur, post-hoc explanations are often incomplete, making it harder for organizations to demonstrate due diligence to regulators, auditors, or customers. As a result, trust in AI systems is increasingly shaped not just by accuracy, but by transparency and repeatability.

These issues often surface only after systems are exposed to real-world usage.

“One of the risks we see more and more is that chatbots are so secure that they stop answering legitimate questions,” Sibout said. “From a business perspective, that can be as damaging as a wrong answer.”

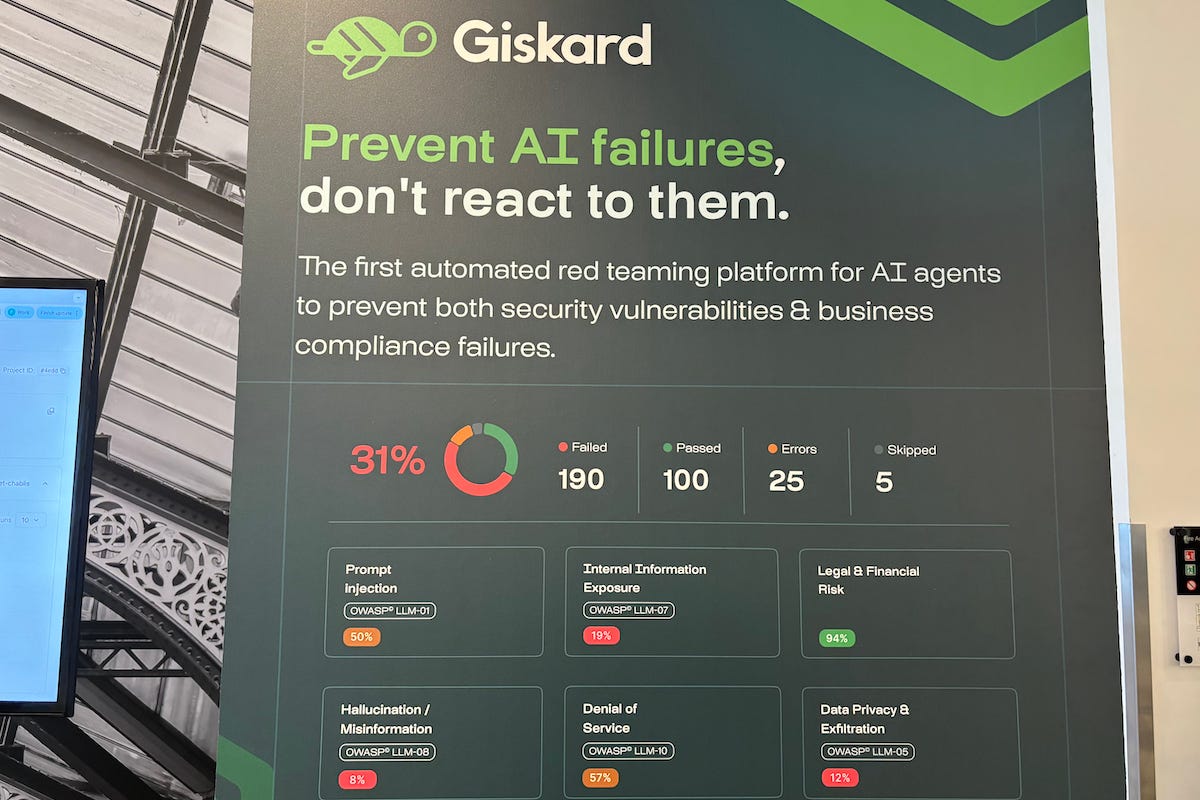

Giskard is an AI quality assurance company established in 2021 and headquartered in Paris.

Unsafe at scale

Beyond hallucination, enterprise AI agents face a broader challenge. They are inherently vulnerable once deployed publicly or integrated into internal workflows. What appears stable in controlled testing can behave unpredictably when exposed to adversarial users, ambiguous prompts, or evolving data sources.

“As soon as you have a public-facing chatbot or agent, you can be sure that someone will try to attack it,” Sibout said. “That can be hackers, or people who simply want to damage a company’s reputation.”

Prompt injection remains one of the most common entry points for exploitation as systems scale.

“Prompt injection is usually one of the first issues we find,” Sibout said. “It is still very common once AI agents move from pilot projects into production.”

Rather than aiming for perfect safety, organizations need clearer visibility into the risks they are accepting.

“You should not aim for zero risk,” Sibout said. “What matters is identifying the residual risk and making an informed decision, instead of relying on gut feeling or manual testing.”

The comments were made during an interview at Momentum AI London 2025, a two-day enterprise technology conference organized by Reuters Events. The discussion focused on how enterprises are operationalizing generative AI and agent-based systems, and where governance and security frameworks are struggling to keep pace.

At the event, Giskard executives described how their platform connects directly to AI agents, chatbots, and underlying knowledge bases to stress-test systems under realistic conditions. The approach mirrors established cybersecurity practices, but applies them to language models and autonomous agents.

Instead of relying on static test cases, the system generates large volumes of synthetic prompts designed to probe edge cases and failure modes. These include ambiguous questions, multi-step conversations, and scenarios that test whether an agent maintains consistency as context shifts over time. The goal is to surface weaknesses that would rarely appear during manual testing or limited pilots.

Continuous red teaming

Traditional AI audits are often conducted as one-off exercises before deployment, but speakers argued that this model no longer matches how AI systems evolve in production. Models are updated, prompts are adjusted, and data sources change, sometimes on a weekly basis.

“This is not a one-off audit,” Sibout said. “We detect vulnerabilities, advise on remediation, and then rerun the tests automatically to see whether issues reappear.”

Continuous testing is critical because regressions are common.

“As soon as there is a regression, our clients are alerted,” Sibout said. “They can see what the issue is and get guidance on how to correct it.”

François Chaulin, enterprise account executive at Giskard, said the same logic applies when companies update models or deploy new agent versions.

“If a client changes the version of a chatbot, they can immediately run another test and make sure everything remains compliant,” he said.

Limits of built-in safeguards

Major large language model (LLM) providers have embedded safety mechanisms into their platforms, but speakers cautioned that these controls address only part of the risk landscape.

“All the major LLM providers have security systems embedded in their generative AI solutions,” Chaulin said. “But research shows these typically catch only a small portion of vulnerabilities.”

Native safeguards should be treated as a baseline rather than a comprehensive solution.

“Using the safeguards from OpenAI, Microsoft, or Google is a good starting point,” Chaulin said. “If you want to go the extra mile on safety and security, you need a specialized vendor.”

The gap becomes more visible as AI agents take on higher-stakes tasks, such as answering customer queries about financial products or guiding users through regulated processes. In these settings, even small inconsistencies can escalate quickly, exposing organizations to complaints, fines, or loss of customer confidence.

Another challenge highlighted at the conference is the organizational divide between technical teams and business owners responsible for AI-driven products. Many existing tools are designed primarily for data scientists, leaving product managers and compliance teams with limited visibility.

“Some solutions are really designed for data scientists and engineers,” Sibout said. “We built a platform so that AI product owners and business users can easily understand risks without having development skills.”

Usability is becoming a differentiator as AI adoption broadens across enterprises.

“The interface allows non-technical teams to create tests, inspect results, and approve remediation steps,” Sibout said.

Open source or enterprise

Cost and procurement cycles also shape how organizations approach AI security. Giskard operates both an open-source Python library and an enterprise platform, reflecting different maturity levels among adopters.

“We have two legs,” Sibout said. “One is open source, which is free and already very useful for many projects, and the other is an enterprise platform designed for deep, continuous testing.”

The distinction is particularly relevant for smaller firms.

“The enterprise version is usually for large organizations,” Sibout said. “But the open-source library is often the only realistic option for smaller fintechs that cannot invest in enterprise-grade software.”

As enterprises push AI agents deeper into core operations, speakers said the central question is no longer whether models perform well in demos, but whether organizations can continuously understand, measure, and manage the risks those systems introduce over time.

They said AI governance is increasingly an operational discipline rather than a one-time compliance exercise. For many organizations, that shift requires new internal processes, clearer ownership of AI products, and closer collaboration between engineering, legal, and business teams. Without those changes, executives warned, even well-performing AI agents may struggle to earn lasting trust in real-world deployments.