Extend Robotics Unveils XR-Based Platform to Propel Humanoid Automation

A new teleoperation platform promises to speed industrial humanoid adoption by merging immersive XR controls with embodied AI training

A new approach to training and deploying humanoid robots centers on a teleoperation system designed to speed the adoption of intelligent automation. It prioritizes immersive control, seamless data capture, and rapid AI training, with the aim of letting organizations put humanoid capabilities to work more efficiently and safely than conventional programming methods allow.

At the center of this vision is a software platform developed by Extend Robotics, which uses extended reality (XR)—a collective term for virtual and augmented reality technologies—to let operators remotely manipulate robots as if they were physically present.

At the 2025 Humanoids Summit in London, Extend Robotics described how this method addresses one of robotics’ biggest barriers: the scarcity of quality training data.

While internet-scale datasets have powered breakthroughs in large language models, comparable datasets for robotic systems do not exist, limiting progress and slowing the arrival of a productive humanoid workforce.

“If you spend just one day with a roboticist, you quickly see that, unlike AI models trained on vast internet data, robots have almost no usable data to learn from,” said Chang Liu, Founder and CEO of Extend Robotics.

He noted that the company’s Advanced Mechanics Assistance System (AMAS) addresses this gap by allowing operators anywhere in the world to control dexterous robots via a VR headset, while simultaneously capturing synchronized sensor, video, and motion data for machine learning.

“Suddenly, your teleoperated robots become AI-driven autonomous robots, and you are still in control,” he added.
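
Capturing synchronized data generally means lining up streams that arrive at different rates on a shared clock. The sketch below is purely illustrative and is not Extend Robotics' implementation: the `Sample` type, the `align` function, and the 20 ms `max_skew` tolerance are all assumptions made for this example. It pairs each video frame with the joint reading closest in time and drops frames that have no sufficiently close reading.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class Sample:
    """One timestamped reading from a stream (hypothetical type for this sketch)."""
    t: float       # timestamp in seconds on a shared clock
    value: object  # payload, e.g. a frame id or a joint-angle vector


def align(video, joints, max_skew=0.02):
    """Pair each video frame with the joint sample closest in time.

    Both lists are assumed to be sorted by timestamp. Frames whose nearest
    joint sample is more than max_skew seconds away are dropped.
    """
    if not joints:
        return []
    times = [s.t for s in joints]
    pairs = []
    for frame in video:
        i = bisect_left(times, frame.t)
        # The nearest sample is either just before or just after position i.
        candidates = joints[max(i - 1, 0): i + 1]
        best = min(candidates, key=lambda s: abs(s.t - frame.t))
        if abs(best.t - frame.t) <= max_skew:
            pairs.append((frame.t, frame.value, best.value))
    return pairs
```

Each resulting tuple holds a frame timestamp, the frame, and the matched joint reading, which is the kind of aligned record a learning pipeline could consume as a single training step.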

The AMAS system also supports synthetic data generation by linking operators to Nvidia-powered virtual environments. These digital twins allow companies to simulate tasks, label datasets, and train state-of-the-art embodied AI models—such as action-function transformers—before deploying them back to physical robots.

Liu showcased a trial in which AMAS trained a robot to autonomously complete a complex industrial plugging task—an intricate manipulation challenge that traditionally resists full automation.

He said the long-term vision is a self-improving data loop: users start with teleoperation, progressively add real-world edge cases from deployed robots, and use this ever-growing dataset to fine-tune their AI models. This would allow robotic systems to gain autonomy rapidly while remaining under human oversight.
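
The loop Liu describes can be pictured as a dataset that keeps growing and triggers fine-tuning once enough new material has accumulated. The following is a minimal sketch under stated assumptions, not the AMAS pipeline: `Episode`, `DemoDataset`, `maybe_finetune`, and the `min_new` threshold are hypothetical names chosen for illustration, and `train_fn` stands in for whatever training routine a team actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:
    """One recorded demonstration (hypothetical type for this sketch)."""
    source: str  # "teleop" for operator demos, "edge_case" for field captures
    steps: int   # number of recorded time steps


@dataclass
class DemoDataset:
    """Growing pool of episodes plus a marker for what the model has seen."""
    episodes: list = field(default_factory=list)
    trained_on: int = 0  # episode count at the time of the last fine-tune

    def add(self, episode):
        self.episodes.append(episode)

    def pending(self):
        """Number of episodes added since the last fine-tune."""
        return len(self.episodes) - self.trained_on

    def maybe_finetune(self, train_fn, min_new=2):
        """Call train_fn on the full dataset once min_new new episodes exist."""
        if self.pending() < min_new:
            return False
        train_fn(self.episodes)
        self.trained_on = len(self.episodes)
        return True
```

In use, teleoperated demonstrations would be added first, edge cases from deployed robots would follow, and a human would remain free to decide whether each fine-tuned model is actually rolled out.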

Commercial Deployments

Azmat Hossain, Robotics Solutions Developer at Extend Robotics, expanded on the platform’s commercialization strategy. He emphasized productivity, safety, and user accessibility as its three central pillars.

“There are still a lot of use cases where people are doing jobs that do not make sense for them. We can solve these problems with robots,” he said.

A major pilot project is underway with Leyland Trucks, a legacy British manufacturer.

Leyland engaged Extend Robotics to address hazardous tasks such as truck painting and high-voltage component insertion, which are currently performed manually despite their safety risks. Initial trials are complete and the project is entering a second deployment phase, which aims to let remote operators control humanoid robots from safe locations while progressively transitioning to AI-driven automation.

Extend Robotics is also participating in an Innovate UK-funded project with Queen Mary University of London (QMUL) and Saffron Grange Vineyard.

This three-year initiative aims to reduce reliance on seasonal labor, cut costs, and boost crop yields by enabling remote operation and training of agricultural robots, even from overseas locations.

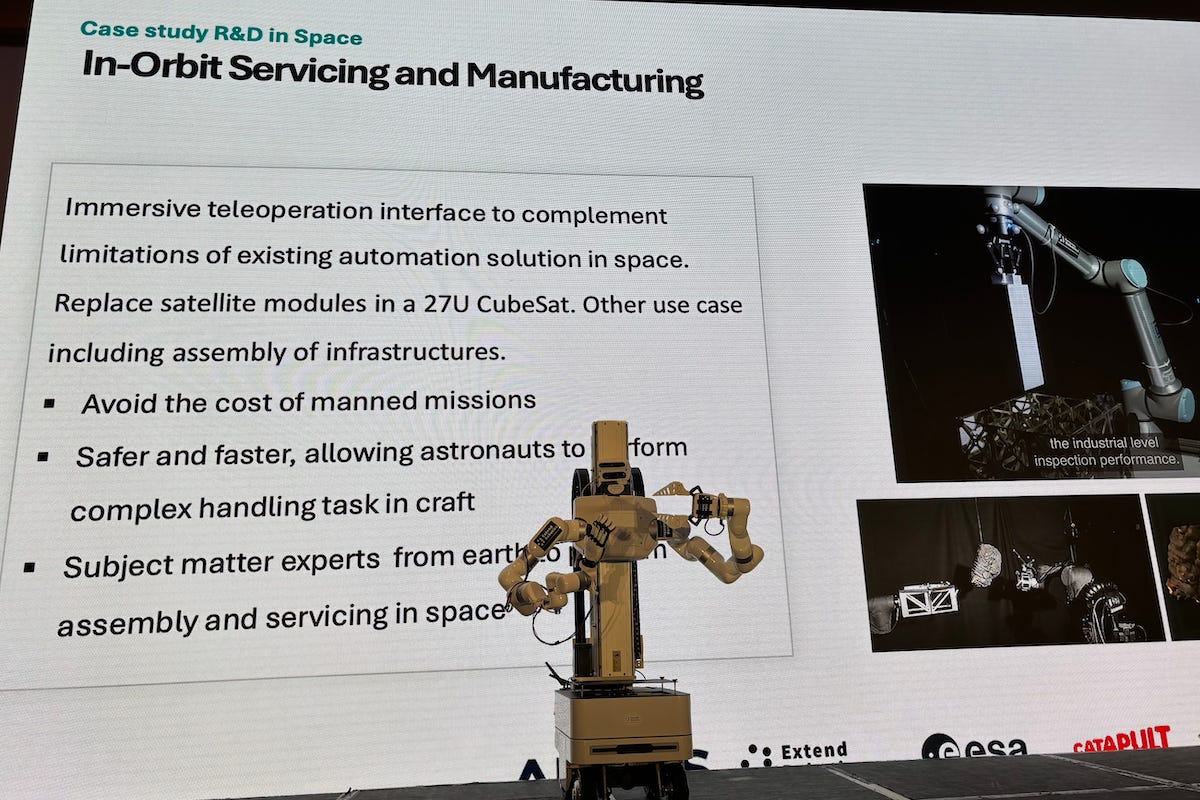

The company is further collaborating with Airbus on high-risk space operations and with AtkinsRéalis on nuclear waste handling tasks.

Hossain said industry feedback has been encouraging, noting that manufacturers are drawn to the system’s low training barrier.

“Automation has been evolving for over a decade, but it is still difficult to use. It needs to be much more intuitive so non-technical people can start using it,” he said.

Immersive User Interface

Liu demonstrated the AMAS interface using a Meta Quest 3 headset to control a simulated robot. He explained that the platform delivers real-time 3D visuals, giving operators depth perception and finger-level precision. Optional haptic gloves provide tactile feedback, helping non-technical users control robots naturally and intuitively.

He noted that the interface can be connected to a variety of robotic systems, from collaborative robotic arms to full humanoid platforms, and that it also supports speech recognition and a full-body embodiment mode.

With growing industrial interest, Extend Robotics is now raising capital to expand its team and infrastructure.

“We really want to scale up our team and services to stay ahead of the competition,” Liu said, inviting prospective partners to visit the company’s Reading headquarters to explore investment opportunities.

Positioning itself as an enabling software layer rather than a hardware manufacturer, the company aims to accelerate the global rollout of humanoid automation by offering a streamlined pipeline from teleoperation to autonomous execution.

If successful, AMAS could democratize robot training and deliver rapid productivity gains across the manufacturing, agriculture, space, and nuclear sectors, ushering in an era in which human expertise can be leveraged at scale through immersive, remotely controlled machines.