Enterprise AI Hits Trust Wall as Investors Seek Predictability

A panel discussion showed explainability now gates adoption, pushing capital toward infrastructure and away from shallow applications

Artificial intelligence (AI) systems are rapidly moving from experimentation to deployment. Still, a growing number of executives and investors argue that the industry’s most significant constraint is not compute, data, or capital. Instead, the limiting factor is trust: whether AI can produce outcomes that are explainable, predictable, and suitable for real-world decision-making.

As AI spreads into regulated and mission-critical environments, questions around determinism, transparency, and accountability have become central. Enterprises may be impressed by generative capabilities, but without confidence in how results are produced, many organizations remain cautious about embedding AI deeply into workflows where errors carry legal, financial, or human consequences.

“AI is still evolving, and I would argue that we’re still in the alchemy phase of the process and yet to become fully scientific. Every time you put in an input and get an output, it’s not deterministic; it’s based on probability. That lack of determinism creates uncertainty and a lack of predictability, which impedes enterprise applications,” said Ilya Paveliev, managing director at UOR Foundation.

“The next questions are why does it do it, what is the context, and how does it do it—what is the deterministic path of getting to the answer,” he said. “As soon as we can answer those questions, we will have more trust, and trust is the main bottleneck right now that prevents wide-scale adoption.”

The implications are both commercial and technical. If enterprises cannot rely on consistent outputs, they struggle to justify deeper deployment, and investors struggle to underwrite durable differentiation.

That tension is now shaping where value accrues across the AI stack, with more disciplined capital favoring durable demand, defensible economics, and systems that can be audited under regulatory scrutiny.

Trust over Hype

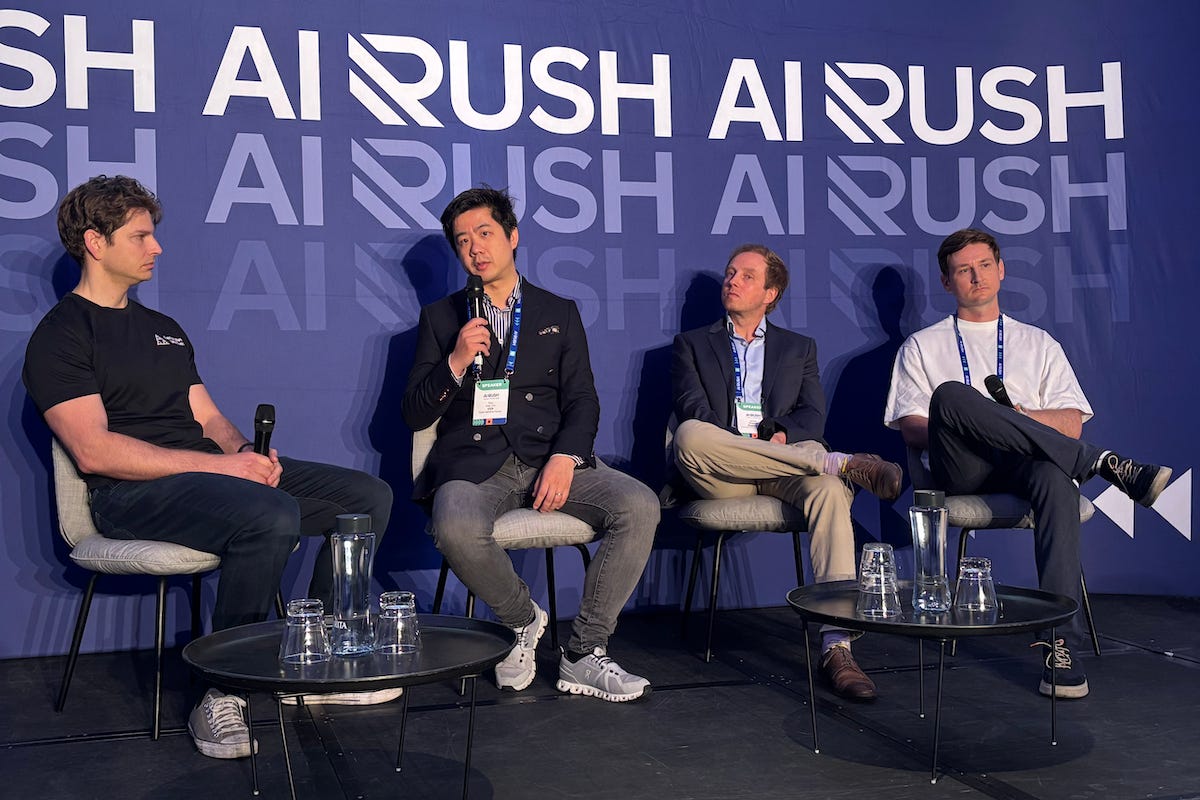

Those themes surfaced at AI Rush in London earlier this year, where Lex Sokolin, managing partner at Generative Ventures, moderated a panel on “AI in Real Practice: What’s Working Now vs. What’s Coming Next?”

Other speakers included Xiao-Xiao Zhu, digital operating partner at KKR (now president of Jupiter Exchange), and James Newman, managing partner and fund manager at Republic.

Sokolin framed the discussion around a disconnect between technical progress and commercial readiness, arguing that the industry has proven what AI can generate but not yet what it can be trusted to do repeatedly in production.

That concern resonated with the panel of investors. Zhu said uncertainty around reliability and economics makes it difficult to underwrite application-layer AI companies over a typical private-equity horizon, as rapid model turnover undermines confidence in long-term defensibility.

“Every month, every two months, there’s a foundational shift happening,” Zhu said. “Someone comes up with a new model that just completely wipes out everyone else, and it’s extremely difficult as a financial investor to develop a view of five-year financials.”

Newman approached trust from the perspective of product depth rather than model architecture, warning that many AI products struggle because they deliver fluent responses without meaningful operational impact.

“A lot of copilots, or chat-based integrations into systems, are pretty shallow,” Newman said. “It depends on the data set it’s sat on and the depth at which it provides answers that are really creating efficiencies.”

Against that backdrop, Paveliev laid out a practical framework for enterprise adoption built around three dimensions of trust. He argued that enterprises need clarity on what an AI system knows, whether it produces consistent results, and whether it can operate within defined constraints.

Paveliev’s three dimensions of trust:

Data (context awareness): Whether an AI system can access complete context, rather than relying on fragmented silos across different systems and data modalities. “Is AI fully context-aware of the data, or does the data live in fragmented silos in different modalities?” Paveliev asked.

Determinism (explainability): Whether a system can explain how an answer is calculated and reliably reach the same conclusion from the same input. Large language models (LLMs) generate text by sampling tokens from a probability distribution, so the same input does not reliably produce the same conclusion, which increases the risk of hallucination.

Execution (operational control): Whether AI can move from demonstrations to controlled task execution, operating within defined rules, limits, and accountability frameworks. Interpretability is also becoming a procurement requirement in high-stakes domains such as defense. DARPA (Defense Advanced Research Projects Agency) currently has a mandate to make AI interpretable and able to explain its outcomes.
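The determinism point can be illustrated with a toy example. The sketch below is not taken from the panel or from any real model: the three-word vocabulary, the scores in `LOGITS`, and the function names are all hypothetical, chosen only to show why drawing the next token from a probability distribution can give different answers on repeated runs, while always picking the top-scoring token gives the same answer each time.

```python
import math
import random

# Hypothetical next-token scores for a toy vocabulary (assumption, not real model output).
LOGITS = {"approve": 2.0, "review": 1.5, "reject": 0.5}


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_token(logits, rng, temperature=1.0):
    """Sampled decoding: draw one token in proportion to its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def greedy_token(logits):
    """Greedy decoding: always return the highest-scoring token."""
    return max(logits, key=logits.get)


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so sampled results vary between runs
    print("sampled:", [sample_token(LOGITS, rng) for _ in range(10)])
    print("greedy: ", [greedy_token(LOGITS) for _ in range(10)])
```

In this toy setup the greedy list is identical on every run, while the sampled list usually mixes all three words. Production systems are more complicated, and other factors can still introduce variation even when sampling is switched off, so this is a simplified picture of the behavior described above rather than a description of any particular vendor's model.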

Follow the Money

The trust debate also shaped how investors described capital allocation, with an emphasis on underwriting discipline and exposure to structurally durable demand rather than short-term model cycles.

Zhu said the concentration of innovation among giant public technology companies complicates long-term investment decisions for private equity, as scale gives hyperscalers an advantage that is difficult to challenge within a five- to seven-year horizon.

“A lot of the biggest innovations right now are driven by huge technology companies that are all publicly listed,” Zhu said. “It’s simply the scale. The market cap of the Magnificent Seven is in the trillions.”

He said frequent model resets make it challenging to build conviction in application-layer economics.

Newman said that volatility helps explain why capital is migrating away from thin applications toward infrastructure and enabling systems, where value is more likely to accrue reliably.

Several panelists pointed to compute, data centers, and related infrastructure as areas where demand is structurally linked to AI growth, regardless of which software stack ultimately dominates.

The panel also noted that AI-driven shifts in user behavior could have second-order effects on digital business models. Zhu said search and discovery are already fragmenting as users move toward conversational interfaces, a trend that could weaken the economics of traditional click-based advertising.

Who Builds Next

While infrastructure favors scale, panelists said AI is reshaping who gets to build at the application layer, as new tools lower barriers for certain types of founders.

Newman said the AI value chain is splitting between infrastructure, where economies of scale dominate, and applications, where speed and experimentation matter more. On the infrastructure side, he said, data and hardware advantages make incumbents hard to displace.

“The infrastructure, because of economies of scale—whether it’s hardware, data, or labeling—is concentrated in the very few,” Newman said. “For new incumbents coming into the space, it’s very difficult to disrupt the incumbents.”

At the same time, he said AI is enabling creative founders to move faster from idea to validation, even without deep technical backgrounds.

“What AI has enabled is for them to get towards an MVP (minimum viable product) or a POC (proof of concept) a lot quicker,” Newman said.

Sokolin cautioned that speed alone is not enough, urging founders to distinguish between meaningful experimentation and superficial products that fail to solve real problems. Several speakers agreed that many AI products prioritize fluent responses over operational depth.

Paveliev said that the gap reinforces the importance of trust and execution. Without explicit constraints and explainability, he said, even fast-moving products will struggle to gain enterprise adoption.

Together, the panel suggested that the next wave of builders will be defined less by speed and more by the ability to deliver systems that operate reliably under real-world constraints.

Sokolin pushed the panel to distinguish between complex problems and lazy products. He framed the market as a mix of “vaporware” and genuine experimentation, and suggested that differentiated outcomes can still appear in unexpected places.

Newman and Paveliev also converged on a shared risk: information quality. As AI-generated content increases, both suggested that trust becomes harder to maintain unless systems can anchor outputs to a verifiable source of truth.

Paveliev criticized probabilistic systems that search across web sources without interpretability.

“As LLMs produce more and more internet content, you’re effectively going to have LLMs eating their own lunch,” he said. “If it continues down that path of probabilistic hallucination, it will become a disaster.”

He said the biggest downside is epistemic.

“The worst outcome is that the source of truth will be completely lost,” he said. “It will be very difficult to decipher what is real and what isn’t real.”

Taken together, the panel’s discussion suggests that until AI systems can demonstrate predictability, explainability, and accountability at scale, trust—not technical capability—will remain the decisive factor shaping enterprise adoption and investment decisions.